I have written about AI Agents in the fleet management space before, and now I’ve built one myself. In just a few hours with n8n and modern LLMs, I created a functional agent that can query fleet data, make (reasonably 😉) intelligent decisions, and even direct my demo-fleet via text-messages. This post shares what it can do, how it’s implemented, and most importantly, the practical lessons learned that could save you time in your own implementations.

What it Does

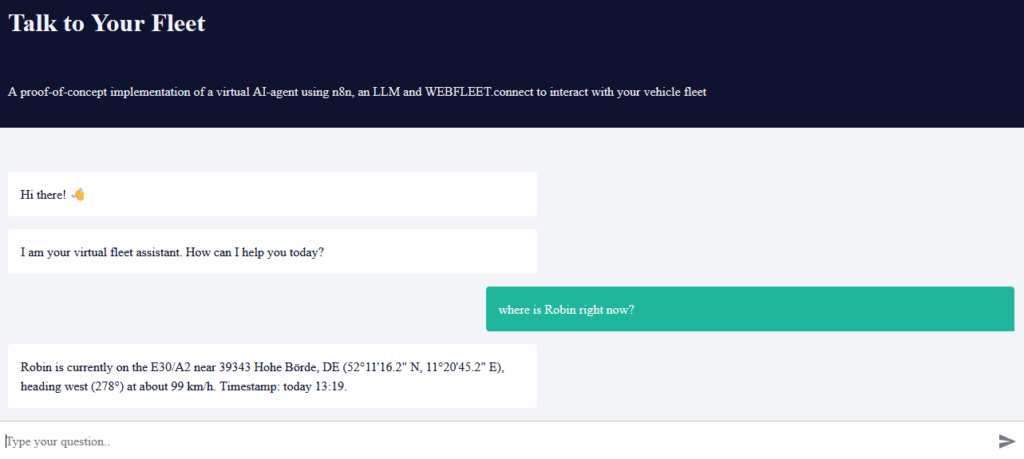

From the outside, like it is common in our days, the agent exposes a chat interface. Let’s start with a simple question:

I know this conversation is hard to read as an image – just wanted to show you how it looks in a browser. From now on, I will show the conversations in a more accessible way as text. Content-wise, as you can see, the agent knows about my vehicles and drivers – and is able to understand associations between them. It is also able to guess which driver I mean just by the first name – not surprising if you have played with LLMs. The answer is correct, by the way – whenever this is not the case, I will mention it for the rest of this article 😂.

Let’s try something more difficult next.

Last update: 28 Aug 2025, 16:09.

Runner-up: “(object #0218)”, also in Lüneburg at roughly the same distance.

Want me to send directions or a message to the driver?

Last known location: Norderstedt — roughly 23 km southeast of Elmshorn.

Last update was 14 Feb 2022, 20:19, so this is outdated.

Driver on record then: Max Mustermann (#666).

Want me to try to refresh its current location or send the driver a message?

But 010’s data is from Feb 2022, so it may not be current.

Want me to fetch its live location and re-check which vehicle is closest right now?

This one did contain some surprises for me. I did not expect the system to be able to correctly assess geographic distances without me supplying a tool that it can use to calculate them – especially for a small German city like “Elmshorn” – and no, you don’t need to look that up, the answers are correct and I did not supply an according tool. I also did not expect that it did apparently take the other vehicle (“object 010”) into account – but decide on its own to ignore it. I did not explicitly instruct the LLM to ignore vehicles with outdated information – GPT-5 did so on its own. Older models are not doing that, so your results with them may vary.

I can now hear some of you arguing: “But wait, Michael – so far everything you have shown us is just a simple chatbot, augmented with vehicle data. This is NOT an agent – there is no action here on behalf of the user!”. And you would be right with what I have shown you so far. To fix that, here is another interaction:

Text: “Hallo Max, bitte schließen Sie Ihren aktuellen Auftrag zügig ab und fahren Sie anschließend schnellstmöglich zum nächsten Termin bei Kunde Reimann. Vielen Dank!”

Möchtest du eine Zustellbestätigung oder die aktuelle Position von Objekt 010 prüfen?

Text sent (in German): “Hallo Max, bitte schließen Sie Ihren aktuellen Auftrag zügig ab und fahren Sie anschließend schnellstmöglich zum nächsten Termin bei Kunden Reimann. Vielen Dank!”

Would you like a delivery status or to check Max’s current position?

The message did arrive with driver Max, as the agent did send the message via the WEBFLEET.connect API. Some fleet management providers have recently started offering translations to drivers languages as a feature – not sure how high the willingness to pay for this from customers will be with the opportunities they have for free on translations in our days.

Also, I have no idea why the model chose to answer in German here initially – both GPT-5 and Gemini 2.5 Flash did that – while Anthropic’s Claude Sonnet answered in English immediately and did not get mixed up. Probably something I could solve by using a stronger prompt, did not really explore this in further detail, as after all I have the luxury of only creating proof-of-concepts here, not finished and 100% tested products 😉.

There is something still missing to get to the full agent-definition I have shared in my previous article – and that is the “making decisions” part – at least if you ignore the decision from above to ignore the vehicle that was obviously out of order. Take a look at this conversation:

Backup option at similar distance: Tony Polster, also in Lüneburg.

Please share:

- Exact job address in Schwerin (street/number)

- Onsite contact name/phone

- Any access notes and urgency/time window

Lots of context filled by the model, decisions made which employee to pick, that a backup is needed, how to reach them, how to deal with the lack of information – along with actions taken. Impressive – for such a simple implementation. Talking about implementation – let’s loose a few words about it.

Some Implementation Details

Don’t worry, I am not going to bore you with a lot of details about how I have implemented the agent. But it helps to know some implementation details to put things into perspective and understand the lessons which are coming up next.

The complete tech stack for this proof-of-concept includes:

- n8n: Low-code workflow automation platform

- OpenRouter: Unified API for accessing multiple LLM providers

- WEBFLEET.Connect API: Fleet management data source

- Various LLMs tested: GPT-5, Claude Sonnet 4, Gemini 2.5 Pro/Flash, Deepseek v3

The framework that the agent is built in is n8n. N8n is a low-code platform that claims to be able to “Increase efficiency across your organization by transforming error-prone, manual processes into secure, automated workflows at scale – 10X faster than conventional code.” I am not an expert at the framework, in fact I have only played around with it a couple of hours – but I can say it is useful and gets the job done. I don’t have a lot of experience using low-code platforms, but this one is easy to setup, understand, has countless examples online on how to use it and integrate it with all kinds of systems – and has an excellent integration with LLM’s and agents. In short – it makes it easy to get started and build something quickly, which is what I wanted.

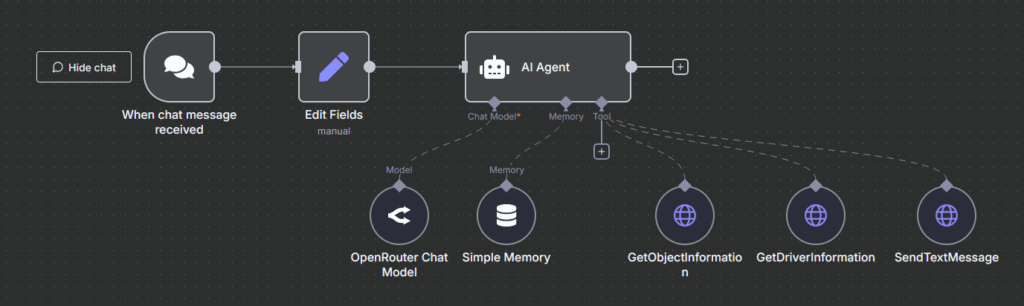

If you want an overview of how it the implementation looks like, here it is:

Not too complicated, is it? This is how easy it is to create a simple agent with a chat-interface, an LLM in the background and 3 tools to access vehicle data. Took me a couple of hours to get started (mostly with n8n), a couple more to venture down a wrong (and too complicated) path, then a final couple hours more to create this implementation as a proof-of-concept. I have used different large language models to test the implementation – and there are some differences between them (see below in the learnings-section). The conversations in the screenshots were generated using GPT-5 from OpenAI – but also lesser and cheaper models work well, for example Deepseek v3, the Anthropic-models or Google’s Gemini 2.5 models.

I am going to spare you all of the prompting going on, let’s just say I have a rather large system prompt (5000 characters) and also some prompts for the actual tools that implement the API-calls to Webfleet’s system. There are no big secrets in there or anything – it is just a verbose description of how the agent should work.

But enough of the foreword, let’s move on to the promised lessons.

Lessons Learned

I have learned a lot from this exercise and here are the things worth sharing.

First of all, keeping it simple helps a lot. My first architecture was way too complicated, as I tried to have the language model create full API-requests itself. This version now has me specifying the tools that the agents can use (that is the bubbles on the bottom right of the implementation-diagram above), including the API-requests in the right format – and the LLM has a description in its prompt and only calls those tools. Way easier to setup and maintain – and has the added benefit that I know exactly what the model is doing and that it cannot mess things up. I can control which API’s the model can call, I can debug what it is doing and if a request does not go through – I can fix it myself. This is less powerful than what I originally had in mind – but it works and it is way easier to setup and maintain.

I also noticed that hallucinations are still a thing, especially for less powerful models. Gemini Flash 2.5 would happily hallucinate an answer – while Gemini 2.5 Pro used a tool instead to actually get the right answer from the WEBFLEET-API. Talking about tool usage: also there, LLM-selection makes a difference. I did not get Gemini 2.5 Flash to call two different tools in sequence to answer a question – but Gemini 2.5 Pro did. Same for OpenAI’s and Anthropic’s current models – no problems with tool usage there. Also, if you like cheap / free models: Deepseek v3 worked just as nicely. So there is a bit of trial and error involved in finding the best LLM for the job – especially since the price-points per request are different per LLM and at least I wanted to find the one with the best cost/benefit ratio for my use-case.

Talking about trying different models – OpenRouter is big time-saver there. One service, one account – and access to all the different state-of-the-art models – from paid to free. Excellent to try things out quickly. And is seamlessly integrated with n8n. Switching between different LLM’s from different providers is literally two clicks, without the need to setup accounts and API-keys with all providers – turned out to be a big time-saver for me.

Another learning that is probably well-known to everyone working with LLM’s a lot: don’t forget to set sampling temperature to 0 when using LLM’s in an agentic AI context, you want repeatability, rather than novelty. Of course also something to play around with for your use-case, but for me having the same results from the LLM as much as possible when giving it the same inputs – is worth something.

You want to give the n8n agent model a memory – so it remembers the conversation history with you like it would if you talk to Chat-GPT via the OpenAI interface. N8n makes that really easy with their SimpleMemory – but also provides other options if you want to save history permanently. However – for testing, you don’t necessarily want the model to remember previous answers and refer to them. One easy way to do that in n8n is – not configuring the agent’s memory in the first place. This way, it has no context of previous questions and answers – which makes testing nice and easy. Alternatively, you can also empty the memory of your agent by refreshing the workflow-page. But don’t forget to save before you press F5 – been there, done that 😉.

Next up, counting is still a challenge for many models. A simple question like “how many vehicles do I have in my fleet” is enough to throw the less powerful ones off. This is fixable by using more powerful models – or by implementing a counting tool for the LLM to use.

The models will also happily guess request parameters, then check the result and see if it gets them further. So don’t expect a setup like this to minimize API-calls 😉. With a bit more context given to the model, this is surely solvable. If Webfleet (or any other telematics data provider) had an MCP-server, this could also be improved. For now and for this simple model, I have helped myself by asking the model to just refrain from inventing parameters – and if it is unsure about the specific parameter, just call the tool without any parameters. This has already improved things significantly – but did not stop this waste of API-calls completely. For production use, implementing rate limiting and cost tracking per conversation would be essential, especially when using premium models like GPT-5. Consider setting up alerts for excessive API usage patterns that might indicate the model is stuck in a loop. The good thing is: the models are usually smart enough to recognize they messed up and try again with better results.

Also interesting for me to learn – LLMs can calculate distances – somehow 😉. This was actually a surprise to me, as I had thought that for a question like “Which vehicle is closest to Elmshorn?” – I would need to provide a tool that calculates geographic distances. But that wasn’t necessary – that knowledge was apparently embedded in the language model and did not need any further refinement. Also there, your experience may vary per geography and model, though – so after all you might need to provide it a getDistance-Tool, especially if you want your model to include actual travel times including real-time traffic. Should not be a big problem, though, as lots of location-based services are available that do this for you (e.g. from TomTom – oh nice, they even have their own MCP-server now, that should make things easy! 😂 ).

Last but not least – I have the following sentence in my system prompt for the agent: “If you can’t help with something, suggest alternatives or redirect to your available capabilities”. The models are always very helpful and forthcoming as a result – but they will also happily suggest things they are not capable to do. You can see it in the conversations above, suggestions like “Would you want me to use a specific Elmshorn address to refine the distance, or set an alert if a vehicle enters Elmshorn?”. Yes, it is possible to define areas with notifications in Webfleet – but none of the tools I have given you actually allows you to do that, my dear fleet assistant 😉. Recognizing its own limitations is obviously something that also needs to be trained.

Next Steps

This article is already long enough, so here are some possible next steps that I could take this forward with:

- Add more tools, add more capabilities. I don’t actually know if I will do that, unless there is demand for it somehow

- This implementation works – but does fell a bit clunky. We don’t really have an API-description in the tool, the agent calls the tools without a lot of knowledge of the actual API, more or less just by guessing and filtering results. For that, it works surprisingly well – but a more standardized solution now exists: MCP (Model Context Protocol) by Anthropic, which provides a standard way for LLMs to interact with external data sources and tools. Think of it as a universal adapter between AI models and APIs. Unfortunately, none of the fleet management providers I found has an MCP server ready – and building one myself is probably not worth the effort. But playing around with MCP is definitely something I will do.

- Next to the “Fleet AI assistant chatbot with agent-abilities”-approach I have tried here, I could venture into more specialized agents that provide real value by monitoring my fleet. For example, create an agent that monitors a vehicle fleet for suspicious activity (like vehicle theft), detecting unusual routes or after-hours usage. Or that analyzes daily driving patterns to identify risky behaviors (harsh braking, speeding) and automatically generates personalized coaching tips for drivers. Another valuable use case: proactive maintenance scheduling based on actual usage patterns rather than fixed intervals. As mentioned in my previous article on this topic – the possibilities are endless in this space.

- In order to move this from a proof-of-concept to a production-ready tool, a lot more work would be needed. More functionality, fine-tuning of the prompts, better error-handling, a focus on security, data privacy – making sure that the agent cannot break out of its role and starts insulting people – or spamming the APIs it has access to, all of this needs to be handled. So if you are wondering why your fleet management provider of choice has not released an agent, yet – well, they have to take care about all of those points for ALL of their customers, while I can just call it a proof-of-concept and push those problems to the back of my head for now 😂.

Closing Remarks

Let me close this with a couple of remarks. When I started building this, I would have never thought you can build something like this – in a relatively short time and that with access to so few tools that I provided (really – just 3 API-calls to the WEBFLEET.connect interface), the agent would be able to interact with my fleet in so many interesting ways. That was definitely a surprise for me, those models have become really adept at filling gaps.

Second, if you own a fleet and are interested in using a tool like this – get in touch and we can talk, as I would only put more effort into this tool if people are actually interested in using it.

And if you are a fleet-management or TMS-provider and want to chat about the possibilities, my experiences or want help with your own implementation – also don’t be shy and contact me and I am sure we can work something out together.